Here it is

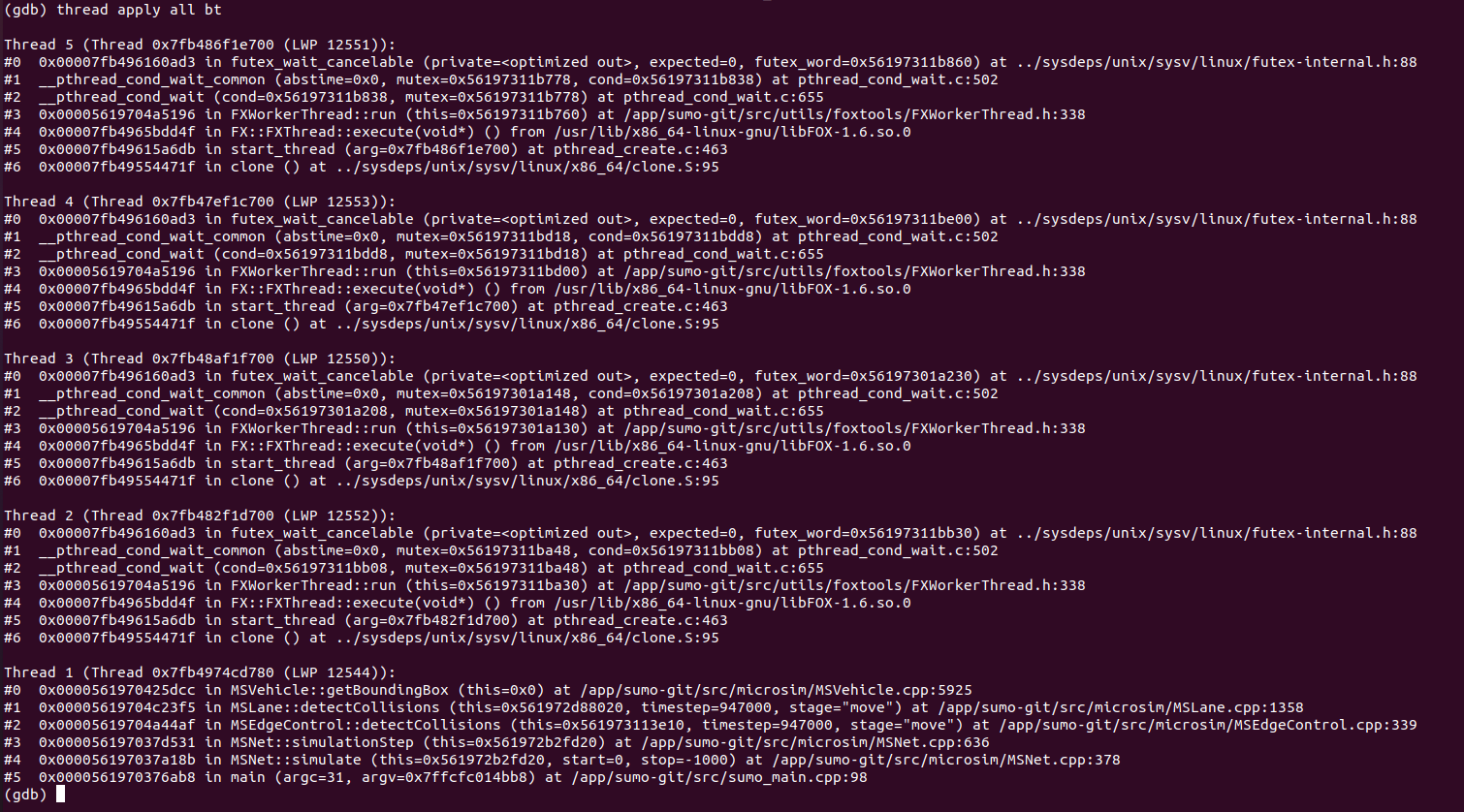

(gdb) thread apply all bt

Thread 5 (Thread 0x7fb486f1e700 (LWP 12551)):

#0 0x00007fb496160ad3 in futex_wait_cancelable (private=<optimized out>, expected=0, futex_word=0x56197311b860) at ../sysdeps/unix/sysv/linux/futex-internal.h:88

#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x56197311b778, cond=0x56197311b838) at pthread_cond_wait.c:502

#2 __pthread_cond_wait (cond=0x56197311b838, mutex=0x56197311b778) at pthread_cond_wait.c:655

#3 0x00005619704a5196 in FXWorkerThread::run (this=0x56197311b760) at /app/sumo-git/src/utils/foxtools/FXWorkerThread.h:338

#4 0x00007fb4965bdd4f in FX::FXThread::execute(void*) () from /usr/lib/x86_64-linux-gnu/libFOX-1.6.so.0

#5 0x00007fb49615a6db in start_thread (arg=0x7fb486f1e700) at pthread_create.c:463

#6 0x00007fb49554471f in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 4 (Thread 0x7fb47ef1c700 (LWP 12553)):

#0 0x00007fb496160ad3 in futex_wait_cancelable (private=<optimized out>, expected=0, futex_word=0x56197311be00) at ../sysdeps/unix/sysv/linux/futex-internal.h:88

#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x56197311bd18, cond=0x56197311bdd8) at pthread_cond_wait.c:502

#2 __pthread_cond_wait (cond=0x56197311bdd8, mutex=0x56197311bd18) at pthread_cond_wait.c:655

#3 0x00005619704a5196 in FXWorkerThread::run (this=0x56197311bd00) at /app/sumo-git/src/utils/foxtools/FXWorkerThread.h:338

#4 0x00007fb4965bdd4f in FX::FXThread::execute(void*) () from /usr/lib/x86_64-linux-gnu/libFOX-1.6.so.0

#5 0x00007fb49615a6db in start_thread (arg=0x7fb47ef1c700) at pthread_create.c:463

#6 0x00007fb49554471f in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 3 (Thread 0x7fb48af1f700 (LWP 12550)):

#0 0x00007fb496160ad3 in futex_wait_cancelable (private=<optimized out>, expected=0, futex_word=0x56197301a230) at ../sysdeps/unix/sysv/linux/futex-internal.h:88

#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x56197301a148, cond=0x56197301a208) at pthread_cond_wait.c:502

#2 __pthread_cond_wait (cond=0x56197301a208, mutex=0x56197301a148) at pthread_cond_wait.c:655

#3 0x00005619704a5196 in FXWorkerThread::run (this=0x56197301a130) at /app/sumo-git/src/utils/foxtools/FXWorkerThread.h:338

#4 0x00007fb4965bdd4f in FX::FXThread::execute(void*) () from /usr/lib/x86_64-linux-gnu/libFOX-1.6.so.0

#5 0x00007fb49615a6db in start_thread (arg=0x7fb48af1f700) at pthread_create.c:463

#6 0x00007fb49554471f in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 2 (Thread 0x7fb482f1d700 (LWP 12552)):

#0 0x00007fb496160ad3 in futex_wait_cancelable (private=<optimized out>, expected=0, futex_word=0x56197311bb30) at ../sysdeps/unix/sysv/linux/futex-internal.h:88

#1 __pthread_cond_wait_common (abstime=0x0, mutex=0x56197311ba48, cond=0x56197311bb08) at pthread_cond_wait.c:502

#2 __pthread_cond_wait (cond=0x56197311bb08, mutex=0x56197311ba48) at pthread_cond_wait.c:655

#3 0x00005619704a5196 in FXWorkerThread::run (this=0x56197311ba30) at /app/sumo-git/src/utils/foxtools/FXWorkerThread.h:338

#4 0x00007fb4965bdd4f in FX::FXThread::execute(void*) () from /usr/lib/x86_64-linux-gnu/libFOX-1.6.so.0

#5 0x00007fb49615a6db in start_thread (arg=0x7fb482f1d700) at pthread_create.c:463

#6 0x00007fb49554471f in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:95

Thread 1 (Thread 0x7fb4974cd780 (LWP 12544)):

#0 0x0000561970425dcc in MSVehicle::getBoundingBox (this=0x0) at /app/sumo-git/src/microsim/MSVehicle.cpp:5925

#1 0x00005619704c23f5 in MSLane::detectCollisions (this=0x561972d88020, timestep=947000, stage="move") at /app/sumo-git/src/microsim/MSLane.cpp:1358

#2 0x00005619704a44af in MSEdgeControl::detectCollisions (this=0x561973113e10, timestep=947000, stage="move") at /app/sumo-git/src/microsim/MSEdgeControl.cpp:339

#3 0x000056197037d531 in MSNet::simulationStep (this=0x561972b2fd20) at /app/sumo-git/src/microsim/MSNet.cpp:636

#4 0x000056197037a18b in MSNet::simulate (this=0x561972b2fd20, start=0, stop=-1000) at /app/sumo-git/src/microsim/MSNet.cpp:378

#5 0x0000561970376ab8 in main (argc=31, argv=0x7ffcfc014bb8) at /app/sumo-git/src/sumo_main.cpp:98

(gdb)