Xpect: Easy Testing and Reviews for Xtext Languages

Xpect is a framework that helps you to specify, test and discuss Xtext languages.

Xpect has been around on GitHub for a while; the first announcement dates back to 2013. Since then, even though there was never much marketing behind it, Xpect has seen remarkable adoption among Xtext projects. One large Xtext project using it, N4JS, has recently moved to the Eclipse Foundation.

Now we are happy to announce the next step: Xpect is becoming an Eclipse project. At the time of writing, the creation review is imminent.

Before I explain what Xpect is exactly, I would like to describe the context in which it is useful: creating Xtext languages. Eclipse Xtext makes it very easy to implement smart editors, code generators, or compilers for your existing or self-designed languages. The smart editors offer most of the nice features you probably love from Eclipse's Java tooling: Semantic Highlighting, Content Assist, Live Validation, Outline, Refactoring, etc. There are also Incremental Building, a global Index, and much more. When you and your team implement an Xtext language, you are in fact working on the grammar file, the ScopeProvider (which defines how cross references are resolved), the validator, the generator, etc. During this work you typically:

- want to have automated tests. Tests give you this blissful peace of mind when refactoring your code and when rolling out the next release.

- review language syntax, semantics, and tooling behavior. Often, the review should be done by a domain expert who may not be a Java programmer.

- set up test stubs to make it easy for fellow developers to implement tests.

Xpect supports these use cases by embedding tests and test expectations in comments within documents of your language. Basically, you create an example document written in your Xtext language, and at any point you can add a comment saying, for example, "here I expect a validation error" or "I expect the next cross reference to link to xyz". I will explain the actual syntax later in this article. This approach rests on the following principles:

- Separate test implementation from test data and expectations. This lets domain experts review your tests without needing to understand Java or Xtext's API.

- Fast test creation. Since a test is in fact a document written in your Xtext language, you can simply use your language's Xtext editor to create tests. Additionally, there is a special editor that combines support for your language with support for Xpect syntax.

- Tests should be self-explanatory on failure. You, as a developer, regularly break tests when you refactor the underlying code. Good tests make a real difference here: a failing test should immediately let you understand the cause of the problem. Tests that require you to launch a debugger to unveil the problem are a time sink.

- Tests should run fast. Almost all tests can run without OSGi/SWT and thus launch and finish in milliseconds. Furthermore, they may use a shared setup. This lets tests run tremendously fast, so developers actually run them locally, because that is faster than triggering a Jenkins job.

- It's OK when test expectations are a bit verbose. This helps in understanding them and makes for good documentation. If you're worried that slightly redundant tests break more often than necessary, don't be: such tests are easy to fix with the "Synchronize Expectation and Actual Test Result" mechanism. Of course, this is not an absolute statement, and a good sense for "the right amount" is needed.

- Self-contained tests. Self-contained tests are easier to understand, both on failure and for domain experts, because no one has to explore other files or methods to gather all relevant information.

- No premature test failures. Asserting a test result should happen with a single assert statement, if possible. Unit tests in which a failing assertion prevents subsequent assertions from executing can be unnecessarily hard to analyze.
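To make the last principle concrete, here is a minimal Java sketch, not taken from Xpect itself: all actual issues are rendered into one text and compared with the whole expectation in a single check, so one mismatch cannot hide another. The class `SingleAssertSketch` and its `Issue` record are hypothetical names chosen for illustration (records require Java 16 or later).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch (not Xpect's API): instead of one assert per issue,
// render all actual issues into one text and compare it with the complete
// expectation in a single check.
final class SingleAssertSketch {

    // One validation issue: severity, message, and the underlined text.
    record Issue(String severity, String message, String location) {
        String render() {
            return severity + " \"" + message + "\" at \"" + location + "\"";
        }
    }

    // Renders every issue on its own line, in the given order.
    static String render(List<Issue> issues) {
        List<String> lines = new ArrayList<>();
        for (Issue issue : issues) {
            lines.add(issue.render());
        }
        return String.join("\n", lines);
    }

    // The single assertion: on failure, the message contains the full
    // expected and actual text rather than just the first difference.
    static void assertIssues(String expected, List<Issue> actual) {
        String actualText = render(actual);
        if (!expected.equals(actualText)) {
            throw new AssertionError("expected:\n" + expected + "\nactual:\n" + actualText);
        }
    }
}
```

Because the failure message always carries the complete actual text, a developer sees every deviating issue at once instead of fixing them one rerun at a time.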

One File, Two Languages

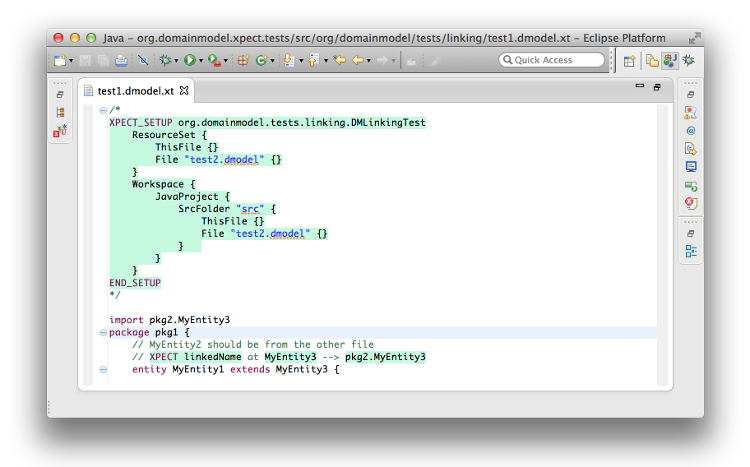

What you can see in the screenshot above is a JUnit test that has been executed and passed. The test class is org.domainmodel.tests.validation.DMValidationTest, and it has executed a file named test1.dmodel.xt. The file defines two test cases, one called warnings and one called errors. On the right-hand side you can see the file's contents in an editor. The editor generically combines support for two languages. First, the language being tested: in the screenshot this is the Domainmodel language (*.dmodel), which ships with Xtext as an example language. Second, the Xpect language (*.xt). The two languages do not interfere with each other, since the Domainmodel language ignores text inside comments and the Xpect language ignores text that doesn't start with an "XPECT" keyword. The editor applies a greenish background color to Xpect syntax.
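The rule that Xpect only looks at text starting with the XPECT keyword can be sketched in a few lines of Java. This is a hypothetical illustration, not Xpect's actual parser: `XpectScannerSketch` and `findStatements` are invented names, and the sketch assumes single-line `//` comments only, ignoring any other comment style.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the "two languages in one file" idea, not Xpect's
// parser. Simplification: only single-line "//" comments are considered.
final class XpectScannerSketch {

    // Returns the XPECT statements of a document; everything else is skipped.
    static List<String> findStatements(String document) {
        List<String> statements = new ArrayList<>();
        for (String line : document.split("\n")) {
            String trimmed = line.trim();
            if (!trimmed.startsWith("//")) {
                continue; // code of the language under test: not Xpect's business
            }
            String comment = trimmed.substring(2).trim();
            // Ordinary comments, such as test titles, are ignored as well.
            if (comment.matches("XPECT\\s.*")) {
                statements.add(comment);
            }
        }
        return statements;
    }
}
```

The language under test sees only its own code, since comments are invisible to it, and this scanner sees only XPECT comments, which is why neither side needs to know about the other.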

Let's take a closer look at the Xpect syntax. At the beginning of test1.dmodel.xt, there is a region called XPECT_SETUP. It holds a reference to the JUnit test that can run this file. Further down we find test cases such as:

    // capitalized property names are discouraged
    // XPECT warnings --> "Name should start with a lowercase" at "Property1"
    Property1 : String

The first line is an optional title for the test. The word warnings refers to a JUnit test method (implemented in Java), and the part following the --> is the test expectation, which is passed as a parameter into that test method. The test method can then compare the expectation with the actual value it calculates and pass or fail accordingly. In this example, the test expectation is composed of the error/warning message (Name should start with a lowercase) and the text that would be underlined by the squiggly line in the editor (Property1). For this validation test, the XPECT statement collects all errors or warnings occurring on the next line. A feature you might find very useful is that the XPECT statement consumes error and warning markers: an expected error or warning is not shown as a marker in the Eclipse editor.
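The anatomy of such a statement can be sketched in Java as follows: the word after XPECT selects a test method, the text after --> is the expectation, and matched markers are consumed. All names here (`XpectStatementSketch`, `Statement`, `Marker`, `checkAndConsume`) are hypothetical. The expectation is kept as raw text, and consuming markers only when the comparison succeeds is an assumption made for this sketch rather than documented Xpect behavior.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch, not Xpect's implementation.
final class XpectStatementSketch {

    // "XPECT <method> --> <expectation>"
    private static final Pattern STATEMENT =
            Pattern.compile("XPECT\\s+(\\w+)\\s*-->\\s*(.*)");

    record Statement(String method, String expectation) {}

    // An issue marker reported for the line following the statement.
    record Marker(String message, String location) {}

    static Statement parse(String comment) {
        Matcher m = STATEMENT.matcher(comment.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("not an XPECT statement: " + comment);
        }
        return new Statement(m.group(1), m.group(2).trim());
    }

    // A "warnings"-style check: renders the markers as "message" at "location",
    // compares them with the expectation and, on success (an assumption of this
    // sketch), removes them so the editor would no longer display them.
    static boolean checkAndConsume(Statement statement, List<Marker> markers) {
        List<String> rendered = new ArrayList<>();
        for (Marker marker : markers) {
            rendered.add("\"" + marker.message() + "\" at \"" + marker.location() + "\"");
        }
        boolean passed = String.join(", ", rendered).equals(statement.expectation());
        if (passed) {
            markers.clear();
        }
        return passed;
    }
}
```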

Synchronize Expectations and Implementation

The fact that Xpect uses textual expectations and embeds them into DSL documents opens the door to another awesome (IMHO) feature: using the Eclipse comparison editor to inspect failed tests and to fix outdated test expectations.

When one or more tests fail and you want to fix them, it is crucial to quickly get an overview of all failed tests. With Xpect you can see all failed test cases from one file in a single comparison editor. Moreover, there are no assert statements that could prevent follow-up assert statements from executing and thereby hide valuable hints on why the test failed. The comparison editor, as the name suggests, also lets you edit the test file.

With this approach you can still start test-first in the spirit of test-driven development. Later, however, when your implementation is actually running, a second phase begins: your implementation suggests what the test expectation should look like. It is your job to review this suggestion very carefully and then, maybe, accept it as the better expectation.
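The idea behind this workflow can be sketched as follows: build a second version of the test file in which each failing expectation is replaced by the actual result, and let a compare view diff the two texts. `SyncSketch` and its line-indexed map of actual results are hypothetical, and this is not necessarily how Xpect's comparison editor works internally.

```java
import java.util.Map;

// Hypothetical sketch, not Xpect's implementation.
final class SyncSketch {

    // Returns a copy of the test document in which the expectation of each
    // given XPECT line (0-based index) is replaced by the actual result.
    static String withActualResults(String document, Map<Integer, String> actualByLine) {
        // Limit -1 keeps trailing empty lines, so the document round-trips.
        String[] lines = document.split("\n", -1);
        for (Map.Entry<Integer, String> entry : actualByLine.entrySet()) {
            int index = entry.getKey();
            int arrow = lines[index].indexOf("-->");
            if (arrow < 0) {
                throw new IllegalArgumentException("no expectation on line " + index);
            }
            // Keep everything up to and including "-->", then the actual value.
            lines[index] = lines[index].substring(0, arrow + 3) + " " + entry.getValue();
        }
        return String.join("\n", lines);
    }
}
```

In this model, accepting a suggestion simply means saving the right-hand text as the new test file, which is why careful review before accepting matters.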

Reusable Test Library

In Xtext projects, there are several scenarios where test coverage is worthwhile. The validation test I explained earlier in this article is just one of them. Xpect ships the Java parts of such tests as a library, and there is also an example project that demonstrates their usage.

There are tests for:

- The parser and Abstract Syntax Tree (AST) structure (demo only, no library).

- Code generators implemented via Xtext's IGenerator interface.

- Validation: Test for absence, presence, message and location of errors and warnings.

- Linking: Verify that a cross reference resolves to the intended model element.

- Scoping: Verify that the expected names are included in, or excluded from, a cross reference's scope.

- ResourceDescriptions: Verify that a document exports the intended model elements with proper names.

- JvmModelInferrer: For languages using Xbase, test the inferred JVM model.

There will be more tests in future versions of Xpect.
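As an illustration of the scoping case, here is a hypothetical check that verifies both included and excluded names against a scope in one pass. The expectation format (comma-separated names, with a leading `!` marking a name that must not be visible) is invented for this sketch and is not Xpect's documented syntax; `ScopeCheckSketch` is likewise a made-up name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical sketch; the expectation format is invented for illustration.
final class ScopeCheckSketch {

    // Expectation: comma-separated names; a leading '!' marks a name that must
    // NOT be in scope. Returns all violations (empty list = pass), so a single
    // check reports every problem at once.
    static List<String> violations(String expectation, Set<String> scope) {
        List<String> problems = new ArrayList<>();
        for (String raw : expectation.split(",")) {
            String name = raw.trim();
            if (name.isEmpty()) {
                continue;
            }
            if (name.startsWith("!")) {
                String excluded = name.substring(1).trim();
                if (scope.contains(excluded)) {
                    problems.add("unexpected: " + excluded);
                }
            } else if (!scope.contains(name)) {
                problems.add("missing: " + name);
            }
        }
        return problems;
    }
}
```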

Support for Standalone and Workspace Tests

UI-independent parts of Xtext, such as the parser, can operate standalone (i.e. without OSGi and an Eclipse workspace). The same is true for Xpect: for capabilities where Xtext does not require OSGi or an Eclipse workspace, Xpect does not require them either. Consequently, Xpect tests can be executed as plain JUnit tests or as Plug-in JUnit tests.

Since the two scenarios require different kinds of setup, each can be configured separately in the XPECT_SETUP section. When a test is executed as a plain JUnit test, the ResourceSet configuration is used; for Plug-in JUnit tests, the Workspace configuration is used.

The screenshot also illustrates how an additional file can be loaded so that it is included in the current ResourceSet or Workspace during test execution.

Suites: Combine Tests in the Same File

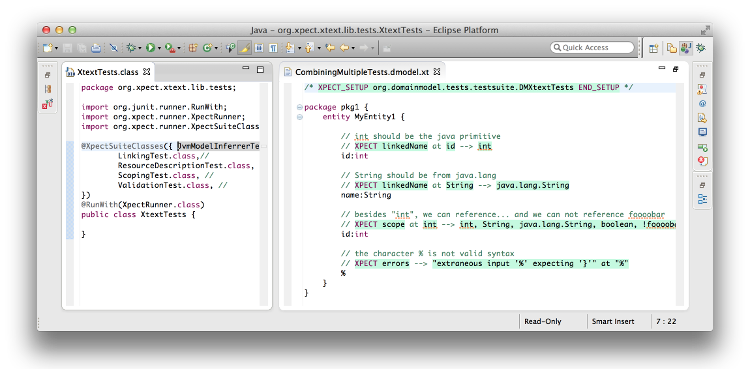

When explaining a specific concept of a language, it is helpful to look at it from all sides: "this is the valid syntax", "these scenarios are disallowed", "this is how cross references resolve", "this is how it is executed", etc. Without suites, testers would have to create a separate test file for every technical aspect. With Xpect test suites, however, it is possible to combine the Java parts of various tests into a single test suite.

The test suite XtextTests combines several tests, so that test methods from all of them can be used in CombiningMultipleTests.dmodel.xt. This makes it possible to group tests by language concept.
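Conceptually, a suite merges the test methods of several Java test classes into one namespace that a single test file can use. The following sketch models that idea with plain Java maps and predicates; `SuiteSketch`, `include` and `run` are hypothetical names and do not reflect how XtextTests is actually implemented.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical model of a suite, not Xpect's API: each contributing test class
// is a map from test-method name to a check on the expectation text.
final class SuiteSketch {

    private final Map<String, Predicate<String>> methods = new LinkedHashMap<>();

    // Adds all methods of one test class. In this sketch a name clash is
    // rejected, so a statement such as "XPECT warnings" maps to one method.
    SuiteSketch include(Map<String, Predicate<String>> testClass) {
        for (Map.Entry<String, Predicate<String>> entry : testClass.entrySet()) {
            if (methods.putIfAbsent(entry.getKey(), entry.getValue()) != null) {
                throw new IllegalStateException("duplicate test method: " + entry.getKey());
            }
        }
        return this;
    }

    // Dispatches one XPECT statement to whichever included class defines it.
    boolean run(String method, String expectation) {
        Predicate<String> test = methods.get(method);
        if (test == null) {
            throw new IllegalArgumentException("unknown test method: " + method);
        }
        return test.test(expectation);
    }
}
```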

Works with CI Builds

Xpect tests have proven to run fine with Gradle, Maven, Tycho, Surefire, and Jenkins. No special integration is necessary since Xpect tests run as JUnit tests.

Source & Download

You can find a link to the update site at www.xpect-tests.org. The source code and an issue tracker are on the GitHub project page.

About the Author